Tool 1: Tools Related to the Recommended Framework

See the following Community Tool Box sections for additional information.

Engage Stakeholders

To help you understand who stakeholders are:

Understanding Community Leadership, Evaluators, and Funders: What Are Their Interests?

Identifying Targets and Agents of Change: Who Can Benefit and Who Can Help

Identifying Community Assets and Resources

To learn how to involve stakeholders:

Involving Key Influentials in the Initiative

Involving People Most Affected by the Problem

To work together with a diverse group:

Training for Conflict Resolution

Encouraging Involvement of Potential Opponents as well as Allies

Describe the Program

To fully understand the need, problem, or goal that the program addresses:

Defining and Analyzing the Problem

Conducting Needs Assessment Surveys

Conducting Public Forums and Listening Sessions

To better explain the activities, components, and elements of the program:

Designing Community Interventions

Identifying Strategies and Tactics for Reducing Risks

To be able to describe resources and assets for the program:

Identifying Community Assets and Resources

For examples of logic models:

Our Model of Practice: Building Capacity for Community and System Change

Our Evaluation Model: Evaluating Comprehensive Community Initiatives

To look broadly at your program and its context:

An Overview of Strategic Planning or "VMOSA" (Vision, Mission, Objectives, Strategies, Action Plan)

Understanding and Describing the Community

To modify the program to fit the local context:

Adapting Community Interventions for Different Cultures and Communities

To learn to explain the program to others so that they see your point of view:

Focus the Evaluation Design

To clarify the purpose:

To identify potential users and uses:

Understanding Community Leadership, Evaluators, and Funders: What Are Their Interests?

To clarify evaluation questions:

For illustrative evaluation questions:

Our Model of Practice: Building Capacity for Community and System Change

For help identifying specific evaluation methods:

Some Methods for Evaluating Comprehensive Community Initiatives

Developing Baseline Measures of Behavior

Obtaining Feedback from Constituents: What Changes are Important and Feasible?

For guidance about making agreements:

Identifying Action Steps in Bringing About Community and System Change

Gather Credible Evidence

For support in implementing specific evaluation methods:

Some Methods for Evaluating Comprehensive Community Initiatives

Developing Baseline Measures of Behavior

Obtaining Feedback from Constituents: What Changes are Important and Feasible?

Justify Conclusions

To see an illustrative process for considering evidence:

Our Model of Practice: Building Capacity for Community and System Change

Ensure Use and Share Lessons Learned

To promote the use of what your organization has learned:

Providing Feedback to Improve the Initiative

Conducting a Social Marketing Campaign

Attracting Support for Specific Programs

To share what you have learned with diverse groups:

Making Community Presentations

Communications to Promote Interest

Tool 2: Evaluation Standards

Use this table to assess how well your evaluation met the standards for a "good" evaluation.

| Standard | Did the evaluation meet this standard? (Yes or No) | Comments |
| --- | --- | --- |
| Utility Standards | | |
| 1. Stakeholder Identification | | |
| 2. Evaluator Credibility | | |
| 3. Information Scope and Selection | | |
| 4. Values Identification | | |
| 5. Report Clarity | | |
| 6. Report Timeliness and Dissemination | | |
| 7. Evaluation Impact | | |
| Feasibility Standards | | |
| 1. Practical Procedures | | |
| 2. Political Viability | | |
| 3. Cost Effectiveness | | |
| Propriety Standards | | |
| 1. Service Orientation | | |
| 2. Formal Agreements | | |
| 3. Rights of Human Subjects | | |
| 4. Human Interactions | | |
| 5. Complete and Fair Assessment | | |
| 6. Disclosure of Findings | | |
| 7. Conflict of Interest | | |
| 8. Fiscal Responsibility | | |
| Accuracy Standards | | |
| 1. Program Documentation | | |
| 2. Context Analysis | | |
| 3. Described Purposes and Procedures | | |
| 4. Defensible Information Sources | | |
| 5. Valid Information | | |
| 6. Reliable Information | | |
| 7. Systematic Information | | |
| 8. Analysis of Quantitative Information | | |
| 9. Analysis of Qualitative Information | | |
| 10. Justified Conclusions | | |
| 11. Impartial Reporting | | |
| 12. Metaevaluation | | |

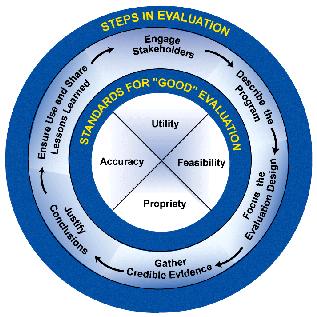

Tool 3: Steps in Evaluation Practice and the Most Relevant Standards

Codes following each standard designate the conceptual group and number of the standard. U = Utility; F = Feasibility; P = Propriety; A = Accuracy.

| Steps | Most Relevant Standards |
| --- | --- |
| Engage stakeholders | Metaevaluation (A12); Stakeholder identification (U1); Evaluator credibility (U2); Formal agreements (P2); Rights of human subjects (P3); Human interactions (P4); Conflict of interest (P7) |
| Describe the program | Complete and fair assessment (P5); Program documentation (A1); Context analysis (A2) |
| Focus the evaluation design | Evaluation impact (U7); Practical procedures (F1); Political viability (F2); Cost effectiveness (F3); Service orientation (P1); Complete and fair assessment (P5); Fiscal responsibility (P8); Described purposes and procedures (A3) |
| Gather credible evidence | Information scope and selection (U3); Defensible information sources (A4); Valid information (A5); Reliable information (A6); Systematic information (A7) |
| Justify conclusions | Values identification (U4); Analysis of quantitative information (A8); Analysis of qualitative information (A9); Justified conclusions (A10) |
| Ensure use and share lessons learned | Evaluator credibility (U2); Report clarity (U5); Report timeliness and dissemination (U6); Evaluation impact (U7); Disclosure of findings (P6); Impartial reporting (A11) |